Projects

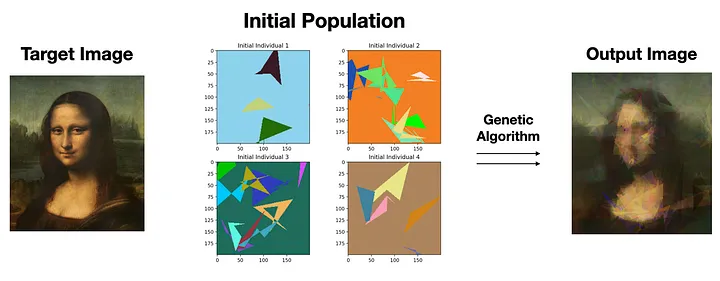

Genetic Algorithm for Image Recreation

Developed a genetic algorithm in Python, using Pygame, to recreate target images from polygons. Successfully approximated the Mona Lisa by evolving polygon-based images, with each candidate scored against the target using a color-difference metric based on human perception.
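The core loop of a project like this can be sketched in a few functions. This is a minimal, self-contained Python sketch, not the project's code. It skips Pygame and paints opaque triangles with a hand-written even-odd point-in-polygon test. Because the exact perception-based metric is not stated, it assumes the low-cost "redmean" weighted-RGB approximation. The gene layout (`random_polygon`), uniform crossover, replace-a-polygon mutation and elitist truncation selection in `evolve` are all illustrative, hypothetical choices.

```python
import math
import random

# Assumption: the perception-based metric is modelled with the "redmean"
# weighted-RGB approximation; the original project may use a different one.
def redmean_distance(c1, c2):
    """Perceptually weighted distance between two (R, G, B) colors (0-255)."""
    r_mean = (c1[0] + c2[0]) / 2
    dr, dg, db = c1[0] - c2[0], c1[1] - c2[1], c1[2] - c2[2]
    return math.sqrt((2 + r_mean / 256) * dr * dr
                     + 4 * dg * dg
                     + (2 + (255 - r_mean) / 256) * db * db)


def point_in_polygon(x, y, poly):
    """Even-odd rule: toggle 'inside' each time a ray towards +x crosses an edge."""
    inside = False
    for i in range(len(poly)):
        (x1, y1), (x2, y2) = poly[i], poly[(i + 1) % len(poly)]
        if (y1 > y) != (y2 > y):  # edge straddles the ray, so it is never horizontal
            if x < x1 + (y - y1) * (x2 - x1) / (y2 - y1):
                inside = not inside
    return inside


def render(genome, w, h):
    """Paint opaque polygons in order onto a black w x h canvas (simplified)."""
    pixels = [(0, 0, 0)] * (w * h)
    for poly, color in genome:
        for y in range(h):
            for x in range(w):
                if point_in_polygon(x + 0.5, y + 0.5, poly):
                    pixels[y * w + x] = color
    return pixels


def fitness(genome, target, w, h):
    """Lower is better: summed perceptual distance to the target pixels."""
    return sum(redmean_distance(a, b) for a, b in zip(render(genome, w, h), target))


def random_polygon(w, h, rng):
    """Hypothetical gene: a random triangle with a random opaque color."""
    verts = [(rng.uniform(0, w), rng.uniform(0, h)) for _ in range(3)]
    return verts, tuple(rng.randrange(256) for _ in range(3))


def crossover(a, b, rng):
    """Uniform crossover: each polygon slot is copied from one of the parents."""
    return [pa if rng.random() < 0.5 else pb for pa, pb in zip(a, b)]


def mutate(genome, w, h, rng, rate=0.1):
    """Replace each polygon with a fresh random one with probability `rate`."""
    return [random_polygon(w, h, rng) if rng.random() < rate else p for p in genome]


def evolve(target, w, h, n_polys=8, pop_size=20, generations=30, seed=0):
    """Elitist GA; returns the best genome and the best score per generation."""
    rng = random.Random(seed)
    pop = [[random_polygon(w, h, rng) for _ in range(n_polys)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        ranked = sorted(pop, key=lambda g: fitness(g, target, w, h))
        history.append(fitness(ranked[0], target, w, h))
        elite = ranked[: pop_size // 4]  # best quarter survives unchanged
        children = []
        while len(elite) + len(children) < pop_size:
            p1, p2 = rng.sample(elite, 2)
            children.append(mutate(crossover(p1, p2, rng), w, h, rng))
        pop = elite + children
    best = min(pop, key=lambda g: fitness(g, target, w, h))
    return best, history


if __name__ == "__main__":
    W, H = 8, 8
    # Toy target: left half red, right half blue.
    target = [(255, 0, 0) if x < W // 2 else (0, 0, 255) for y in range(H) for x in range(W)]
    best, history = evolve(target, W, H)
    print(f"best fitness: {history[0]:.0f} -> {history[-1]:.0f}")
```

Because the best quarter of each generation is carried over unchanged, the best score can never get worse from one generation to the next. Recreating a real painting would likely need many more polygons, semi-transparent blending and far more generations than this toy run.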

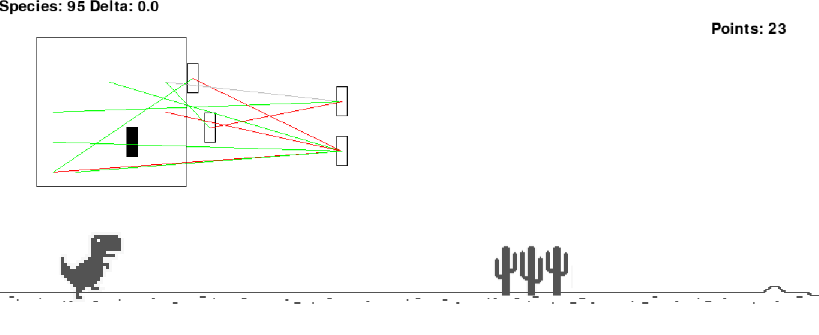

Neuroevolution of Augmenting Topologies

Implemented the NEAT algorithm to evolve neural networks that play the Chrome Dinosaur Game. The agents learned when to jump and when to duck purely through neuroevolution, without any hardcoded rules. After several generations, the best-performing network reached a score of over 10,000, effectively beating the game.

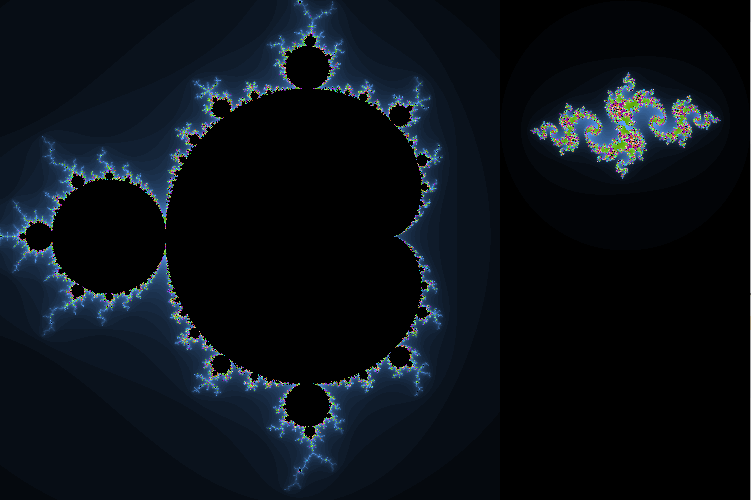

Mandelbrot & Julia Set Visualiser

Created a real-time Mandelbrot and Julia set visualiser using C++ and the SDL2 library. The Julia set updates dynamically based on the mouse position, letting users explore both fractals interactively and zoom in to great depth, revealing their intricate self-similarity and complexity.
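Both fractals come from the same escape-time iteration z → z² + c. They differ only in which quantity varies per pixel. The sketch below is written in Python for consistency with the other example, not in the project's C++/SDL2. The helper names and the `center`/`span` zoom parameters are hypothetical. It also assumes, as is common in interactive viewers, that the mouse position is mapped to the Julia set's constant c.

```python
def escape_time(z, c, max_iter=100):
    """Iterate z -> z*z + c; return the first step where |z| > 2, else max_iter."""
    for n in range(max_iter):
        if abs(z) > 2.0:  # for |c| <= 2, |z| > 2 guarantees the orbit diverges
            return n
        z = z * z + c
    return max_iter


def mandelbrot(c, max_iter=100):
    """Mandelbrot set: always start at z = 0 and vary c per pixel."""
    return escape_time(0j, c, max_iter)


def julia(z, c, max_iter=100):
    """Julia set: fix c for the whole image and vary the starting z per pixel."""
    return escape_time(z, c, max_iter)


def screen_to_complex(px, py, width, height, center=0j, span=3.0):
    """Map a pixel to the complex plane. `span` is the visible real-axis width,
    so zooming in means shrinking it and moving `center` (hypothetical API)."""
    re = center.real + (px / width - 0.5) * span
    # Screen y grows downward, while the imaginary axis grows upward.
    im = center.imag - (py / height - 0.5) * span * height / width
    return complex(re, im)


if __name__ == "__main__":
    W, H = 64, 32
    # Hypothetical mouse position; a real viewer would read it from its event loop.
    mouse_x, mouse_y = 20, 12
    c = screen_to_complex(mouse_x, mouse_y, W, H, center=-0.5 + 0j)
    for py in range(H):
        row = (julia(screen_to_complex(px, py, W, H), c) for px in range(W))
        print("".join("#" if n == 100 else "." for n in row))
```

A real-time viewer would run the same per-pixel loop over the whole window every frame and color pixels by their escape step. Zooming amounts to shrinking `span`. With 64-bit floats the image eventually breaks down once `span` nears machine precision, which is why the zoom is deep but not unlimited.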